Noodleman

Member

Posts: 728

Days Won: 28

Everything posted by Noodleman

-

It could still be building the cache. Try it several times; it might just work once the cache is fully built. Also check your server error log, it should have the reason for the white screen logged.

-

Rebuild your image library. Filemanager -> Update File List should solve it.

-

It's a server setting. You need to ask your hosting company to change the setting for you or, if allowed, change it yourself.

-

this? https://github.com/cubecart/v6/issues/1801

-

It's fixed. The issue was that the upgrade from v4 ported several thousand cached folders/images from the magictoolbox module into images/source. These are not being used, but they killed performance. Moved them out of source, rebuilt the image library... instant page loads.

-

If you are willing to share FTP & admin area login details with me privately, I can look at this for you... shouldn't take long to fix

-

There is no free extension available to do this, but I do have a commercial module which does this very well. As far as I am aware it's the only dynamic filter module available for CubeCart. It's a very powerful solution, as it also offers dynamic menus based on your product attributes as an optional feature.

You can see it in use here: https://www.doorsandfloors.co.uk/

https://www.cubecart.com/extensions/plugins/product-filters-filter-categories-and-build-menus-dynamically-using-product-metadata

All my modules come with a no-obligation trial period, so you can test it out and see how it works and whether it's suitable for your requirements. If a week isn't long enough, open a ticket via my support portal and I can offer an extended demo period.

1 reply. Tagged with: cubecart v6, question (and 2 more)

-

Evening,

I'd like to give the developer of the module a chance to reply, but wanted to add that if you get stuck getting this resolved then let me know; depending on what you need, I probably have something already in my toolbox. I would recommend contacting the developer via their support services in the first instance, as I'm assuming "no warranty" refers to data impacted by the module rather than the module itself.

-

https://github.com/cubecart/v6/issues/1771

-

Can pretty much guarantee it's a long running query then. Did you check SQL queries? Is it slow on all pages?

-

Check for long running SQL queries... could be a sign of indexes not being correct. Note that I've not seen the "debug" output from CubeCart ever correctly report SQL query times, so you may want to check manually.

Also, if you have a large store there could be caching of images ongoing, which can murder performance until it's done. A quick way to check is to go to the filemanager: if you can load it, it's not caching.

When you did your upgrade, did you clean up all the old modules? They won't work in v6, so deleting them all is a good idea. I've seen conflicts caused by these in the past. What modules do you have installed?
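For the manual check, something along these lines is a reasonable starting point. It's a sketch that assumes the store runs on MySQL or MariaDB and that your database user can see the relevant threads (on shared hosting you may only see your own). The 5 second threshold is just an example value.

```sql
-- List the statements currently executing, with full query text.
-- The Time column is how long each one has been running, in seconds.
SHOW FULL PROCESSLIST;

-- The same information, filtered to statements that have been running
-- for more than 5 seconds (arbitrary example threshold).
SELECT id, user, db, time, state, info
FROM INFORMATION_SCHEMA.PROCESSLIST
WHERE command <> 'Sleep'
  AND time > 5
ORDER BY time DESC;

-- Check whether the slow query log is enabled and what threshold it uses.
SHOW VARIABLES LIKE 'slow_query_log%';
SHOW VARIABLES LIKE 'long_query_time';
```

Run it while a slow page is loading; anything that keeps showing up near the top of the list is worth a closer look.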

-

manually integrating VoguePay payment Gateway into cubeCart

Noodleman replied to Hakeem Salimon's topic in Technical Help

To do it correctly you will need to produce a gateway module.

2 replies. Tagged with: cubecart v6, extension (and 2 more)

-

these are all features on my roadmap, including an additional audit trail module and overlap with my custom order status module, as well as statistical stock information and forecasting.

-

I've also got this on my R&D roadmap for a future module which will offer a variety of features, but it won't be available for quite a while.

-

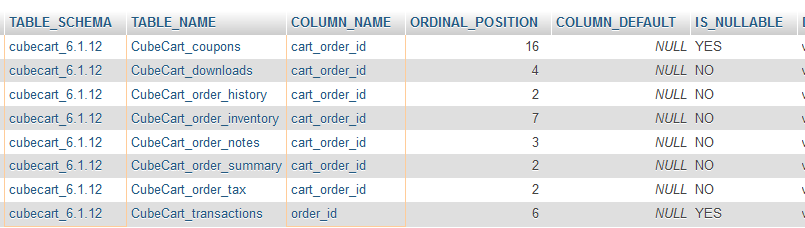

From one of my test stores:

SELECT * FROM INFORMATION_SCHEMA.COLUMNS WHERE COLUMN_NAME LIKE '%order_id%' AND TABLE_SCHEMA = 'cubecart_6.1.12';

-

$this->_order_id = 'OFD'.date('ymd-His-').rand(1000, 9999);

The column changes need to be applied manually in the DB, which is easy to do using phpMyAdmin: change the max length to whatever is required. Make sure the change is the same for all fields, otherwise it can impact index performance.
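If you'd rather run the change as SQL than click through the column editor, it would look roughly like this. The table and column names (CubeCart_order_summary, cart_order_id) and the NOT NULL attribute are assumptions about a typical install, so check them against your own database first. The length of 21 comes from the example format: 'OFD' (3) + 'ymd-His-' (14) + a 4 digit number (4).

```sql
-- 1. Look at the current definition so the NULL / DEFAULT attributes
--    can be carried over unchanged.
SHOW CREATE TABLE CubeCart_order_summary;

-- 2. Widen the column. MODIFY replaces the whole column definition,
--    so repeat whatever attributes step 1 showed (NOT NULL is assumed here).
ALTER TABLE CubeCart_order_summary
  MODIFY cart_order_id VARCHAR(21) NOT NULL;

-- 3. Repeat step 2 with the same length for every other column that
--    stores the order number, so joined columns keep matching types.
```

Take a database backup before altering anything.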

-

In class.order.php, function createOrderId: change the logic of the function to match your own order number scheme, and change ALL DB columns that store the order number if your scheme will exceed the current limits.
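To see which columns would be too short for a new scheme, a query like the one below can help. It's only a sketch: it assumes MySQL/MariaDB, that you are connected to the store's database, and that the relevant columns have "order_id" in their name. The 21 is an example target length, and the table/column in the second query are assumed names.

```sql
-- Text columns whose name contains "order_id" and whose maximum length
-- is below the length the new scheme needs (21 is an example value).
-- Integer columns have a NULL maximum length and are filtered out.
SELECT TABLE_NAME, COLUMN_NAME, COLUMN_TYPE, CHARACTER_MAXIMUM_LENGTH
FROM INFORMATION_SCHEMA.COLUMNS
WHERE TABLE_SCHEMA = DATABASE()
  AND COLUMN_NAME LIKE '%order_id%'
  AND CHARACTER_MAXIMUM_LENGTH < 21
ORDER BY TABLE_NAME;

-- Longest order number currently stored (assumed table and column names).
SELECT MAX(CHAR_LENGTH(cart_order_id)) AS longest_order_id
FROM CubeCart_order_summary;
```

Every column the first query returns would need widening before the new scheme goes live.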

-

Reasonably easy to do, but you also need to increase the field size in the DB to accommodate longer values.

-

are you using the Minimum Order Values plugin?

-

Drop me a message or raise a ticket via my support portal that outlines the specification of your requirements and I can review it for you: https://www.noodleman.co.uk/support

-

I wrote an API for CubeCart which I use for customer projects along these lines. The API adds a framework which can be used to build the integrations as required. However, if you are just looking for a referral, then you should look into affiliate networks.

-

There could be a requirement to add some indexes to the database tables the module uses, which will boost performance. This shouldn't be impacted by cache and is likely a database level performance issue. If you are willing, I can look directly at the issue for you, review the SQL queries and tune the tables via indexes as required to check if performance improves. Alternatively, I can provide some SQL queries to run, and if you share the results with me I can recommend indexes to add on specific tables.
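As a rough idea of what that kind of review involves, here is a generic sketch for MySQL/MariaDB. The table name, column name and index name are hypothetical placeholders, not the module's real schema.

```sql
-- Hypothetical table and column names, for illustration only.
-- Ask the database how it plans to run a slow query.
EXPLAIN
SELECT *
FROM example_module_table
WHERE product_id = 123;

-- If the plan shows type = ALL (a full table scan) and key = NULL,
-- an index on the filtered column is a candidate.
CREATE INDEX idx_product_id ON example_module_table (product_id);

-- Review which indexes already exist before adding more.
SHOW INDEX FROM example_module_table;
```

Re-running the EXPLAIN after adding an index shows whether the query actually uses it.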

-

This can be done if you wish; it's entirely possible to set up within your store theme. The copy/paste code samples are a generic starting point which can be modified to suit your own requirements.

-

I don't offer a formal tiered discount, but I am sure we can work something out over a PM or a support ticket.

-

My module does not have an interface to allow import of a file, however you could import data directly into the database table where manual related products are stored. The table is called "CubeCart_related_prods" and you simply need to add the product ID of the parent item, then the IDs of the related items. You can define a sort order as well. You can use an application such as Navicat to quickly import data into the DB from a file.
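A direct import could look something like the sketch below. The column names (parent_product_id, related_product_id, sort_order) and the one-row-per-related-item layout are placeholders I've assumed for illustration; check the real structure with the first statement before inserting anything.

```sql
-- Check the real column names first; the ones used below are placeholders.
DESCRIBE CubeCart_related_prods;

-- Hypothetical columns: parent product, related product, display order.
-- Product IDs are example values.
INSERT INTO CubeCart_related_prods (parent_product_id, related_product_id, sort_order)
VALUES
  (101, 205, 1),
  (101, 206, 2),
  (102, 301, 1);
```

If your store uses a custom table prefix, the table name will differ accordingly.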